While Scrapy is super useful, sometimes it could be a little stifling to create a project and then a spider and all the settings that go with it for a simple one-page web crawling task.

While Beautiful soup, along with the requests module will do the trick, if you want the power of Scrapy, then it's easier if it can be run standalone.

It is possible to run Scrapy as a standalone script. We use this at xxx all the time for our internal side projects.

It is also beneficial to run it directly sometimes because this can be loaded as a part of a larger system. We use it along with Beanstalk to run jobs in the background.

You will have to use the CrawlerProcess module to do this. The code goes something like this.

from scrapy.crawler import CrawlerProcess

c = CrawlerProcess({

'USER_AGENT': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/34.0.1847.131 Safari/537.36',

'FEED_FORMAT': 'csv',

'FEED_URI': 'output.csv',

'DEPTH_LIMIT': 2,

'CLOSESPIDER_PAGECOUNT': 3,

})

c.crawl(QuotesSpider, urls_file='input.txt')

c.start()

As you can see, we are starting the crawler inside Python code and are passing arguments that we would normally pass from the command line like external filenames and user-agent strings.

Let's take a simple scrapy crawler that crawls quotes and see if we can make it run standalone…

import scrapy

from scrapy.spiders import CrawlSpider, Rule

from bs4 import BeautifulSoup

class QuotesSpider(CrawlSpider):

name = "quotes"

def __init__(self, urls_file, *a, **kw):

super(QuotesSpider, self).__init__(*a, **kw)

def start_requests(self):

urls = [

'http://quotes.toscrape.com/page/1/',

'http://quotes.toscrape.com/page/2/',

]

for url in urls:

yield scrapy.Request(url=url, callback=self.parse)

def parse(self, response):

page = response.url.split("/")[-2]

filename = 'quotes-%s.html' % page

with open(filename, 'wb') as f:

f.write(response.body)

self.log('Saved file %s' % filename)

from scrapy.crawler import CrawlerProcess

c = CrawlerProcess({

'USER_AGENT': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/34.0.1847.131 Safari/537.36',

'FEED_FORMAT': 'csv',

'FEED_URI': 'output.csv',

'DEPTH_LIMIT': 2,

'CLOSESPIDER_PAGECOUNT': 3,

})

c.crawl(QuotesSpider, urls_file='input.txt')

c.start()

Notice how you will have to override the init function to accept custom arguments.

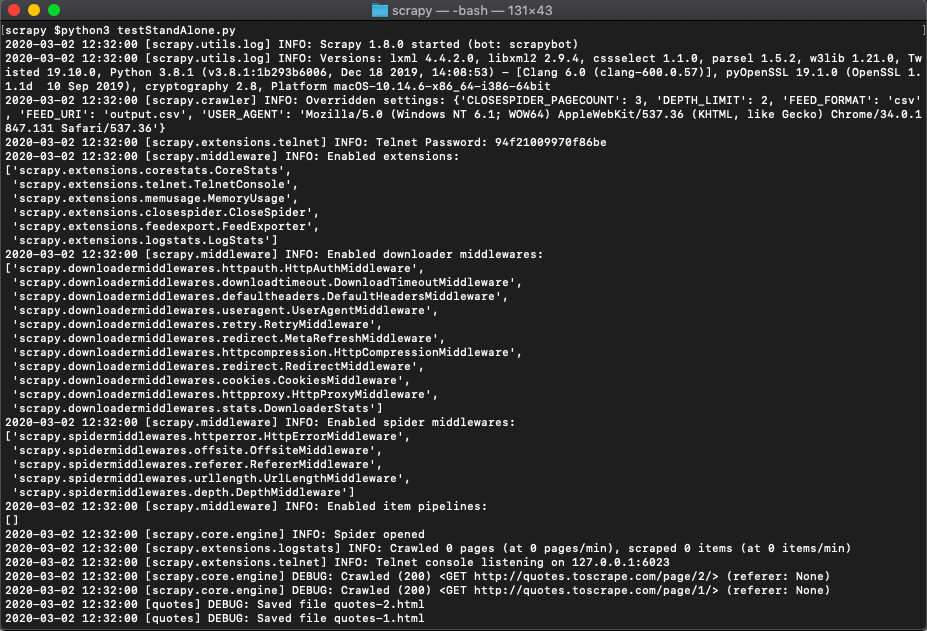

You save this as testStandAlone.py and run it like this.

python3 testStandAlone.pyYou will get the results.